Freedom of speech is a great thing. The problem is that when people are given that freedom, they tend to use it to say dumb stuff and hurl nasty comments at other people. And it’s because of all this hateful and mostly misleading communication that social media sites like Facebook need to employ full-time moderators to help keep their platforms under control. Although it might appear to most of us that the staff aren’t doing much, the truth is that Facebook’s 15,000 moderators are some of the hardest-working people in the company. Yes, that’s right: the company employs an army of mods, and they still can’t catch all the harmful things people post every day. It’s simply too much.

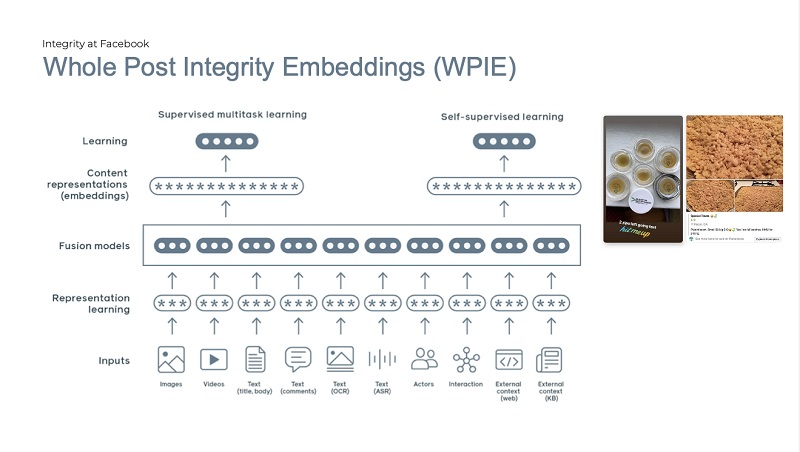

For many years the company has utilised some form of machine learning to try to proactively identify this content, but it still takes far too much time for human reviewers to sift through it all. Facebook has now announced that it is making some changes, introducing a little more AI into the mix to make moderation easier.

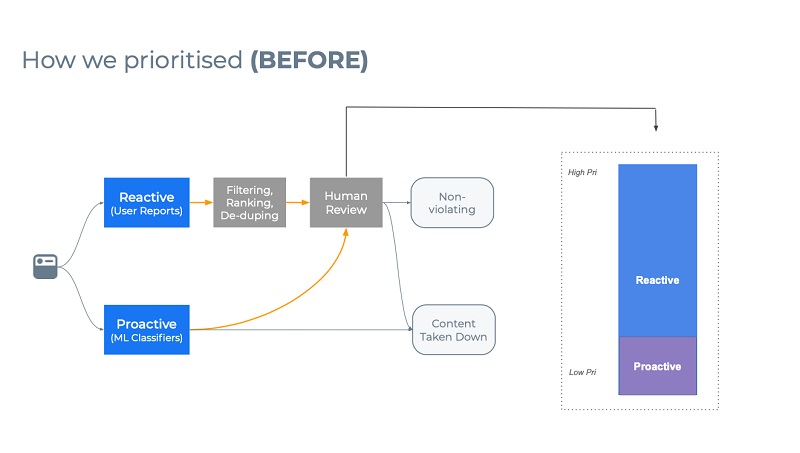

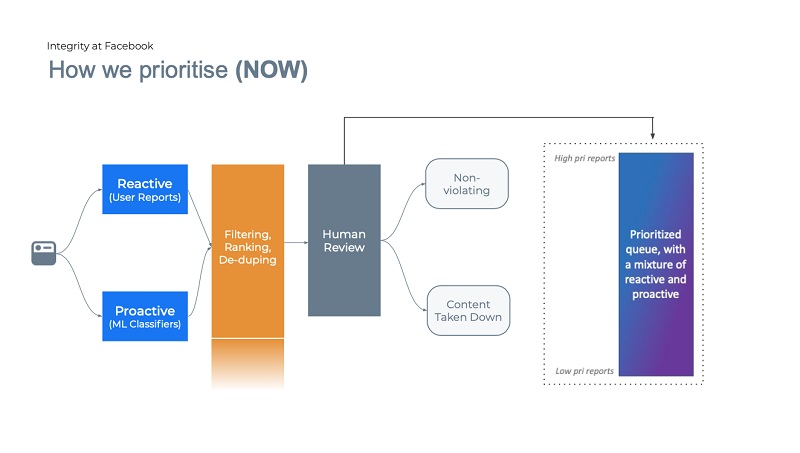

Previously, human moderators simply received all content that had been flagged either by users or by the machine learning scripts. Now the company is going to use AI to prioritise that content so that moderators can get to the most urgent posts first. The new system will also learn from the human moderators’ decisions to further refine its prioritisation and get better at surfacing the most critical material quickly. The goal is for abusive and harmful content to be dealt with faster than before.
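Facebook hasn’t published how its review queue works, but the basic idea of handling the most urgent items first can be illustrated with a priority queue. The Python sketch below is purely hypothetical: the `ReviewQueue` class, the post IDs and the 0-to-1 severity scores are made up for illustration, and it assumes some upstream model has already assigned a score to each flagged post.

```python
import heapq
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(order=True)
class QueuedPost:
    # heapq is a min-heap, so storing the negated score puts the highest score on top
    sort_key: float
    post_id: str = field(compare=False)
    score: float = field(compare=False)


class ReviewQueue:
    """Hypothetical moderation queue: a higher score means the post is reviewed sooner."""

    def __init__(self) -> None:
        self._heap: List[QueuedPost] = []

    def add(self, post_id: str, score: float) -> None:
        # Score is assumed to come from some upstream classifier (not shown)
        heapq.heappush(self._heap, QueuedPost(-score, post_id, score))

    def next_for_review(self) -> Optional[str]:
        # Hand the most urgent post to a moderator, or None when the queue is empty
        if not self._heap:
            return None
        return heapq.heappop(self._heap).post_id

    def __len__(self) -> int:
        return len(self._heap)


if __name__ == "__main__":
    queue = ReviewQueue()
    queue.add("holiday-photo", 0.05)
    queue.add("violent-threat", 0.97)
    queue.add("spam-link", 0.40)
    while len(queue):
        print(queue.next_for_review())
    # prints violent-threat, spam-link, holiday-photo (one per line)
```

The contrast with the old approach is that moderators no longer work through flags in whatever order they arrive; the queue always hands over the highest-scoring post next.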

How the new system will prioritise content is not immediately clear, though Facebook has previously shared how it uses machine learning to analyse posts, examining things like the language used, the validity of shared links, and the content of videos. Apparently the new system will use something similar, but apply weighting to certain words, images, and links to help escalate specific content, and the more often human moderators shoot down a particular kind of content, the more highly it will prioritise similar posts.
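The feedback loop described above can be sketched as a simple additive weighting scheme. This is an assumption for illustration, not Facebook’s actual method: the hypothetical `FeedbackScorer` class treats each word, link domain or image label as a named feature, scores a post by summing the weights of its features, and nudges those weights up whenever a moderator removes a post and down when a post is left up. The feature names and the learning rate are invented.

```python
from typing import Dict, Iterable


class FeedbackScorer:
    """Hypothetical scorer: a post's priority is the sum of its feature weights."""

    def __init__(self, learning_rate: float = 0.1) -> None:
        self.learning_rate = learning_rate
        # Feature name (e.g. "word:scam", "domain:bad.example") -> learned weight
        self.weights: Dict[str, float] = {}

    def score(self, features: Iterable[str]) -> float:
        # Unseen features contribute 0.0; duplicate features are counted once
        return sum(self.weights.get(f, 0.0) for f in set(features))

    def record_decision(self, features: Iterable[str], removed: bool) -> None:
        # A removal raises the weight of every feature in the post;
        # a post the moderator left up lowers them instead
        delta = self.learning_rate if removed else -self.learning_rate
        for f in set(features):
            self.weights[f] = self.weights.get(f, 0.0) + delta


if __name__ == "__main__":
    scorer = FeedbackScorer(learning_rate=0.5)
    scorer.record_decision(["word:scam", "domain:bad.example"], removed=True)
    scorer.record_decision(["word:hello"], removed=False)
    print(scorer.score(["word:scam", "image:cat"]))  # 0.5
    print(scorer.score(["word:hello"]))  # -0.5
```

A production system would use far richer models and signals, but the principle is the same: every moderator decision shifts the weights, so posts resembling previously removed content climb higher in the queue.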

Hopefully these changes will make things easier for moderators and help Facebook deal with harmful content faster. Sadly, they won’t filter the people posting this stuff off the internet entirely, but I guess that’s a bit of a pipe dream for now.

Last Updated: November 17, 2020
