Today Nvidia announces the GeForce GTX 1660 Ti, a brand-new card for the mid-range market that replaces both the outgoing GTX 1060 6GB and the GTX 1070 8GB. It does so without really pushing either card out of the way, because it lacks the additional 2GB of VRAM that the GTX 1070 boasts, but it's just fast enough to make the GTX 1060 look like the worse deal. What makes it a weird product in Nvidia's stack is the lack of RT and Tensor cores, which power ray tracing, DLSS, and the other features attached to the RTX family. It is essentially a GTX 1070 for less money.

Nvidia sees the GTX 1660 Ti as an attractive upgrade for a particular audience: anyone still running a GTX 960 or similar from the Maxwell family, and anyone running a GTX 1060 who wants more power. It's a narrow market to play in, because the starting retail price for a GTX 1660 Ti is set at $279, which is $20 under the GTX 1060's launch price and $100 under the GTX 1070's launch price. The designs coming from Nvidia's partners will surely push it up into the $300 range, at which point it's no longer a mainstream product. If it launched closer to or under $250, I could see people making accommodations in their budget for it. Reviews of the card will bear out its true value for gamers looking for a good deal.

| GPU | GTX 1060 | GTX 1660 Ti | GTX 1070 |
|---|---|---|---|
| CUDA cores | 1280 | 1536 | 1920 |
| Base clock | 1506MHz | 1500MHz | 1506MHz |
| Boost clock | 1708MHz | 1770MHz | 1683MHz |
| FP32 compute | 4.4 TFLOPS | 5.5 TFLOPS (11 TOPS effective) | 6.4 TFLOPS |
| TMUs | 80 | 96 | 120 |
| Memory bandwidth | 192GB/s | 288GB/s | 256GB/s |
| Memory type | GDDR5 | GDDR6 | GDDR5 |
| TDP | 120W | 120W | 150W |
| Die size | 200mm² | 284mm² | 314mm² |
| Launch price (US) | $299 | $279 | $379 |

Spec-wise, the GTX 1660 Ti is impressive. Manufactured on the same 12nm process at TSMC as the rest of the Turing family, it boasts 1536 CUDA cores with a base clock of 1500MHz and a boost clock of 1770MHz. Thanks to Turing's ability to run floating-point and integer workloads together in the same clock, it effectively has 11 tera-operations per second (TOPS) of compute power. On this point, Nvidia's advertising is a little misleading, because the conventional floating-point figure is 5.5 TFLOPS, a whole 900 GFLOPS slower than the GTX 1070. It makes up for this deficit by improving slightly on some of the GTX 1070's specifications, such as boost clock and memory bandwidth. In real-world benchmarks we can therefore expect the GTX 1660 Ti to come out a wee bit slower on average than the GTX 1070, on the order of 5% or so.
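The compute figures in the table come from a standard back-of-the-envelope formula: CUDA cores × 2 operations per clock (a fused multiply-add counts as two) × boost clock. The Python sketch below applies it to the table's core counts and clocks. It assumes the "11 TOPS effective" number simply adds an equal amount of INT32 throughput on top of FP32, as described above. These are theoretical peaks, not benchmark results; by this formula the GTX 1660 Ti lands at roughly 5.44 TFLOPS, so the 5.5 figure looks slightly generous and the gap to the GTX 1070 works out nearer 1 TFLOPS.

```python
# Peak FP32 throughput = CUDA cores x 2 FLOPs per clock (one fused multiply-add) x boost clock.
# Core counts and boost clocks are copied from the spec table.
CARDS = {
    "GTX 1060": (1280, 1708),     # (CUDA cores, boost clock in MHz)
    "GTX 1660 Ti": (1536, 1770),
    "GTX 1070": (1920, 1683),
}


def peak_fp32_tflops(cores: int, boost_mhz: int) -> float:
    """Theoretical peak single-precision throughput in TFLOPS."""
    flops_per_clock = cores * 2               # an FMA counts as two floating-point operations
    return flops_per_clock * boost_mhz * 1e6 / 1e12


for name, (cores, mhz) in CARDS.items():
    print(f"{name}: {peak_fp32_tflops(cores, mhz):.2f} TFLOPS")

# Assumption: the "effective TOPS" figure counts INT32 work at the same rate as FP32.
effective = 2 * peak_fp32_tflops(*CARDS["GTX 1660 Ti"])
print(f"GTX 1660 Ti FP32 + INT32: {effective:.1f} TOPS")
```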

Elsewhere in the spec sheet, the GTX 1660 Ti improves on the GTX 1070 with higher memory bandwidth (288GB/s versus 256GB/s) thanks to its GDDR6 memory, and it has a much lower thermal design power, rated at 120W instead of 150W. The die is also its own spin on the Turing architecture (TU116) and not a cut-down version of the RTX 2060's TU106, as one might expect. The lack of Tensor and RT cores chops off over 100mm² of die space, although it is still much larger, and more expensive to produce, than the GTX 1060 die. That additional 84mm² of silicon does add up.
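Memory bandwidth is the per-pin data rate × bus width ÷ 8 bits per byte. The per-pin rates and bus widths in this sketch (8Gbps GDDR5 on 192-bit and 256-bit buses, 12Gbps GDDR6 on a 192-bit bus) are not in the table; they are the commonly published reference specs and should be treated as assumptions. They show how the GTX 1660 Ti can beat the GTX 1070's bandwidth despite a narrower bus.

```python
# Memory bandwidth (GB/s) = per-pin data rate (Gbps) x bus width (bits) / 8.
# Assumed reference memory configurations (not listed in the article's table).
MEMORY = {
    "GTX 1060": (8.0, 192),      # GDDR5: (Gbps per pin, bus width in bits)
    "GTX 1660 Ti": (12.0, 192),  # GDDR6: faster pins, same bus width as the GTX 1060
    "GTX 1070": (8.0, 256),      # GDDR5: slower pins, wider bus
}


def bandwidth_gb_s(gbps_per_pin: float, bus_bits: int) -> float:
    """Peak memory bandwidth in gigabytes per second."""
    return gbps_per_pin * bus_bits / 8


for name, (rate, bus) in MEMORY.items():
    print(f"{name}: {bandwidth_gb_s(rate, bus):.0f} GB/s")
```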

The value of the GTX 1660 Ti compared to a GTX 1070 on a clearance sale, then, is longevity. One of the slides in Nvidia's presentation included a weird chart where the GTX 1060's performance forms the baseline on the X-axis, and the GTX 1660 Ti's lead over the GTX 1060 in several games is plotted as a scatter of relative performance.

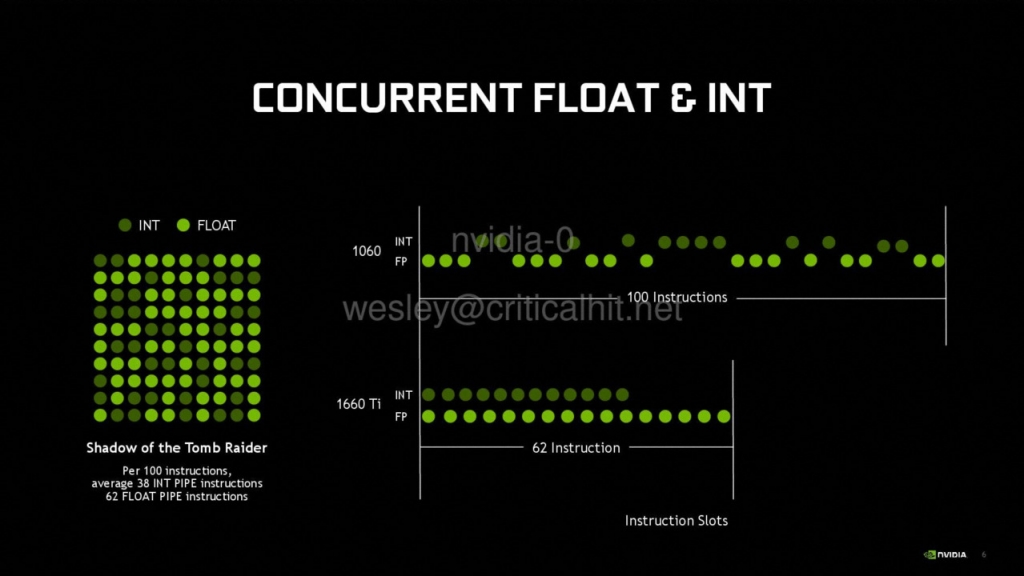

The Turing architecture can issue integer and floating-point operations concurrently, on separate INT32 and FP32 cores, instead of running them as one serial stream. Previously, Nvidia's GPUs ran both kinds of instruction through the same cores, so every integer instruction (an address calculation, for example) took a turn that a floating-point instruction could otherwise have used. It's loosely similar to older interrupt-based computers, where you'd stop the CPU in the middle of a job, demand that it receive and process [X] input, and then allow it to resume its previous job once the interrupt was complete. That's how things worked before Turing. Now those jobs run in parallel without the constant switching, which is a large part of why Turing is faster than Pascal in compute workloads.
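To make the difference concrete, here is a deliberately simplified Python model. It assumes each datapath issues one instruction per cycle and ignores memory stalls, latency and warp scheduling, so it is not how Nvidia's hardware actually schedules work. The 36-integer-per-100-floating-point instruction mix is a hypothetical example.

```python
# Toy issue model, for illustration only:
# - shared pipeline (Pascal-style): FP32 and INT32 instructions take turns on the same cores
# - concurrent pipelines (Turing-style): separate FP32 and INT32 cores issue side by side


def cycles_shared(fp_ops: int, int_ops: int) -> int:
    """Every instruction occupies the same datapath, so the costs add up."""
    return fp_ops + int_ops


def cycles_concurrent(fp_ops: int, int_ops: int) -> int:
    """Integer work overlaps floating-point work; the longer stream sets the pace."""
    return max(fp_ops, int_ops)


def speedup(fp_ops: int, int_ops: int) -> float:
    """How much faster the concurrent design finishes the same instruction mix."""
    return cycles_shared(fp_ops, int_ops) / cycles_concurrent(fp_ops, int_ops)


# Hypothetical shader mix: 36 integer instructions for every 100 floating-point ones.
print(f"shared: {cycles_shared(100, 36)} cycles")          # 136 cycles
print(f"concurrent: {cycles_concurrent(100, 36)} cycles")  # 100 cycles
print(f"speed-up: {speedup(100, 36):.2f}x")                # 1.36x
```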

That means that as more games pile on compute workloads mixing integer and floating-point maths, work that would have kept older GPUs switching between the two is now handled side by side. Newer games that pile on loads of physics effects and special shaders don't bog down the GPU as much as they would older architectures, so the performance gap between the GTX 1060 and GTX 1660 Ti should continue to grow. By extension, the GTX 1660 Ti may also end up faster than the GTX 1070 as time passes, with the older card no longer able to keep up in modern games utilising bleeding-edge rendering techniques.
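The same toy model hints at why the gap could widen as games lean harder on integer work: the more integer instructions there are per floating-point one, the more a concurrent design gains. This sketch sweeps a few hypothetical instruction mixes; the numbers come from the simplified model, not from any game or benchmark.

```python
def overlap_speedup(int_per_100_fp: float) -> float:
    """Speed-up of concurrent INT32/FP32 issue over a shared pipeline (toy model)."""
    fp = 100.0
    shared = fp + int_per_100_fp           # Pascal-style: both types queue for one datapath
    concurrent = max(fp, int_per_100_fp)   # Turing-style: the longer stream sets the pace
    return shared / concurrent


# Hypothetical mixes, from light to heavy integer use.
for ratio in (20, 36, 50, 100):
    print(f"{ratio:>3} INT per 100 FP -> {overlap_speedup(ratio):.2f}x")
```

In this model the benefit tops out at 2x when integer and floating-point work are equal; real-world gains will be much smaller, because clocks, memory bandwidth and drivers all play a part.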

I've always maintained that the idea of "future-proofing" isn't a realistic goal for any hardware component, but this may be the first example of it being possible. AMD fans have been memeing the idea of "Fine Wine" in AMD's drivers for years now, because driver optimisations for the GCN architecture always seem to help older cards stay relevant and improve over time. The same may ring true for Nvidia as well. It's a pity that the GTX 1660 Ti had to lose the RT and Tensor cores to drop to this price point, but the tradeoff is that you still get the efficiency boost from the instruction parallelism. That Nvidia felt the need to highlight this in its press briefing says something, I think, about how it feels Turing has been portrayed in the media: as poor value, because the RTX cards were pitched at a higher price bracket.

The GeForce GTX 1660 Ti launches worldwide on 22 February 2019, with retail prices in the US starting at $279 for partner cards. Nvidia has no plans to produce a Founders Edition version of the card, giving its partners leeway to price their products slightly higher. There is no planned game bundle for the GTX 1660 Ti.

Last Updated: February 22, 2019
