Intel’s next generation of graphics cores isn’t here yet, but that hasn’t stopped the company from talking a big game about it. At GDC19, Intel spoke a little more about its GPU plans for the next year, and even unveiled a few planned changes on the software side of things, showing off a new driver interface and settings menu. The company has also just pushed out a few slides with specs for its Gen11 family, and it’s clearly gunning for AMD and current-gen consoles.

In Intel’s 33-page PDF detailing the Gen 11 graphics family, which will debut with Ice Lake later this year, there are a lot of in-depth technical details about the architecture’s make-up and where Intel has optimised the design. Gen 11 isn’t the departure from Intel’s design that everyone has been hoping for. It’s very similar to Gen 9 shipping in the 9th Gen processor family, but there are some significant upgrades.

Intel Gen 11 GT2 Specifications

| | Gen9 GT2 | Gen11 GT2 | Xbox One |
|---|---|---|---|
| Execution/Compute Units | 24 | 64 | 12 |
| GPU Sub-slices | 3 | 8 | N/A |
| Single-precision FLOPS | 384 GFLOPS | 1 TFLOPS | 1.3 TFLOPS |
| Half-precision FLOPS | 768 GFLOPS | 2 TFLOPS | 2.6 TFLOPS |
| Pixels/clock | 8 | 16 | 4 |
| Primitives/clock | 1 | 1 | 4 |
| Cache | 768KB | 3MB | 32MB+8GB |
| Memory support | LP-DDR3/DDR4 | LP-DDR4/DDR4 | DDR3 |
| IMC configuration | 2x 64-bit | 4x 32-bit | 8x 32-bit |

I’ve pulled these specs out of Intel’s table in their document and focused on the things that Critical Hit readers might be interested in. On paper, we’re looking at a big performance increase for Ice Lake’s graphics capability. GT2 is Intel’s codename for the mid-range configuration of the Gen 11 graphics you’ll find included in their processors, so a theoretical Core i3-109400 quad-core would launch with the Gen 11 GT2 configuration.

How much of a performance increase are we talking about? In hardware alone, there are roughly 2.7x as many execution units (64 versus 24), and 32-bit single-precision performance is at the 1 TFLOPS level. You can see how close it is on paper to the raw performance of Microsoft’s Xbox One console. It’s not that far off, is it? It’s also close to AMD’s Radeon Vega 8 graphics that ship in the Ryzen 3 2200G, AMD’s cheapest quad-core APU, which has a 32-bit rating of 1.1 TFLOPS.
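For anyone who wants to check the arithmetic, the headline figures can be roughly reproduced from unit counts and clock speeds. This is a back-of-the-envelope sketch, not Intel’s own method: it assumes 8 FP32 lanes per Intel execution unit and 64 per AMD compute unit, counts a fused multiply-add as two operations, and uses illustrative clocks (1.0GHz for both Intel parts, 853MHz for the Xbox One, 1.1GHz for Vega 8). The function name `peak_tflops` is made up for this example.

```python
def peak_tflops(units, lanes_per_unit, clock_ghz):
    """Theoretical FP32 throughput in TFLOPS, counting one FMA as two operations."""
    flops_per_clock = units * lanes_per_unit * 2
    return flops_per_clock * clock_ghz / 1000.0


# (units, FP32 lanes per unit, clock in GHz) -- lane counts and clocks are assumptions
gpus = {
    "Gen9 GT2": (24, 8, 1.0),
    "Gen11 GT2": (64, 8, 1.0),
    "Xbox One": (12, 64, 0.853),
    "Vega 8 (2200G)": (8, 64, 1.1),
}

for name, (units, lanes, clock) in gpus.items():
    print(f"{name}: {peak_tflops(units, lanes, clock):.2f} TFLOPS")
```

These are peak numbers only. Real-world performance depends on actual boost clocks, memory bandwidth and drivers, so treat them as a ceiling rather than a prediction.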

Despite the fact that GT2 is reserved for Intel’s mid-range chips, this is a good sign for their Pentium and Celeron lineups. Those will start with at least half the Gen11 GT2 performance, which will be a big boost for Intel’s budget chips. That should be enough to match AMD’s GPU performance in the super-cheap Athlon 200GE.

Intel has also improved the pixel performance, which should make the experience of using 4K displays much more palatable, and the L3 cache increases as well. The Achilles heel of the architecture, its primitives-per-clock rate, will be the deciding factor in how close Intel is able to come to AMD’s performance. This is a measure of how many triangles the GPU can draw in one clock cycle, and AMD can do four times as many on the GCN architecture. As a reminder, Gen11 is probably Intel’s last architecture to have this issue. Moving forward, Raja Koduri’s team will no doubt address those bottlenecks to improve performance.
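To put the per-clock numbers in context, here is a rough sketch of what they mean at 4K. It assumes a 1.0GHz GPU clock and a 60fps target, neither of which comes from Intel’s document, and the helper names are invented for this example.

```python
PIXELS_4K = 3840 * 2160  # pixels in one 4K frame


def per_second(per_clock, clock_ghz):
    """Peak rate in billions per second for a per-clock hardware figure."""
    return per_clock * clock_ghz


def full_screen_passes(pixels_per_clock, clock_ghz, fps=60):
    """How many times per frame the GPU could touch every 4K pixel at peak fill rate."""
    return per_second(pixels_per_clock, clock_ghz) * 1e9 / (PIXELS_4K * fps)


# Assumed 1.0GHz clock for both generations
print(f"Gen9 GT2:  {full_screen_passes(8, 1.0):.1f} full-screen passes per frame")
print(f"Gen11 GT2: {full_screen_passes(16, 1.0):.1f} full-screen passes per frame")

# Triangle throughput scales the same way: 1 primitive/clock versus 4
print(f"Triangle rate gap at equal clocks: {per_second(4, 1.0) / per_second(1, 1.0):.0f}x")
```

Doubling pixels per clock doubles the headroom for overdraw and post-processing at 4K, while the triangle rate stays flat between Gen9 and Gen11.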

Where Intel will beat AMD is likely in idle power consumption and memory bandwidth. The separation of the memory controllers into four 32-bit units will mean that Intel can power-gate unused memory controllers when on battery power, and improved DDR4 and LP-DDR4 support means they can take advantage of faster memory kits above 2400MHz.
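It’s worth noting that two 64-bit controllers and four 32-bit controllers add up to the same 128-bit bus, so any bandwidth gain has to come from faster memory. A quick sketch of the usual peak-bandwidth formula shows this. The DDR4-2400 and 3200MT/s figures below are example speeds chosen for illustration, not confirmed Ice Lake specs, and `peak_bandwidth_gbs` is a made-up helper.

```python
def peak_bandwidth_gbs(channels, channel_bits, mega_transfers_per_s):
    """Theoretical peak memory bandwidth in GB/s."""
    bytes_per_transfer = channels * channel_bits / 8
    return bytes_per_transfer * mega_transfers_per_s / 1000.0


# Same total bus width, different memory speeds (speeds are illustrative assumptions)
gen9_style = peak_bandwidth_gbs(channels=2, channel_bits=64, mega_transfers_per_s=2400)
gen11_style = peak_bandwidth_gbs(channels=4, channel_bits=32, mega_transfers_per_s=3200)

print(f"2x 64-bit at 2400MT/s: {gen9_style:.1f} GB/s")
print(f"4x 32-bit at 3200MT/s: {gen11_style:.1f} GB/s")
```

Integrated graphics share this bandwidth with the CPU cores, which is why faster memory support matters so much for these chips.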

New Features!

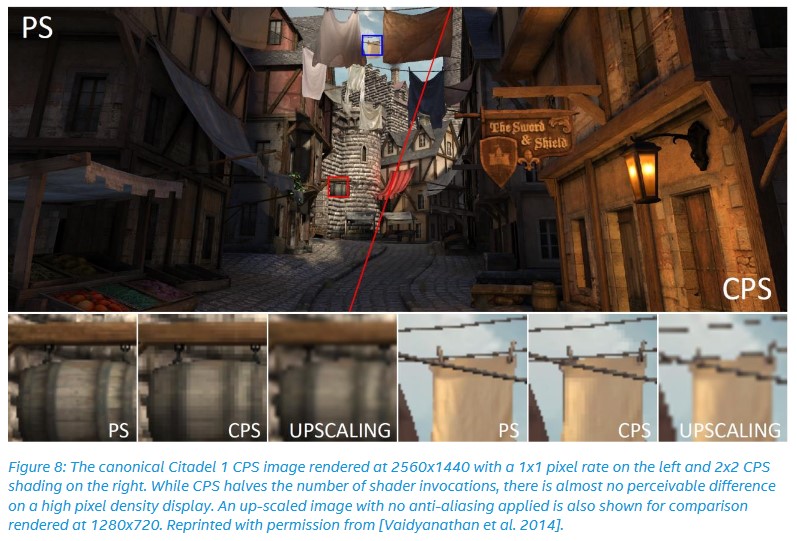

Feature-wise, Gen 11 will have some new tricks up its sleeve to save performance and hopefully give it an edge over AMD’s GPUs. The first is a new feature supported in hardware called Coarse Pixel Shading (CPS). The technique is similar to variable rate shading, which is a part of Direct3D 12 and already supported on Nvidia’s Turing graphics cards, but there are fundamental differences.

Variable rate shading is an attribute that developers add to their shaders when building an object to tell the GPU that a particular object or part of the display doesn’t need to have the same amount of detail all over. Parts of the object that have no change in the texture or any additional detail (or are moving too quickly to have additional detail) don’t have to be rendered in high quality, which saves the GPU from doing additional work that isn’t necessary.

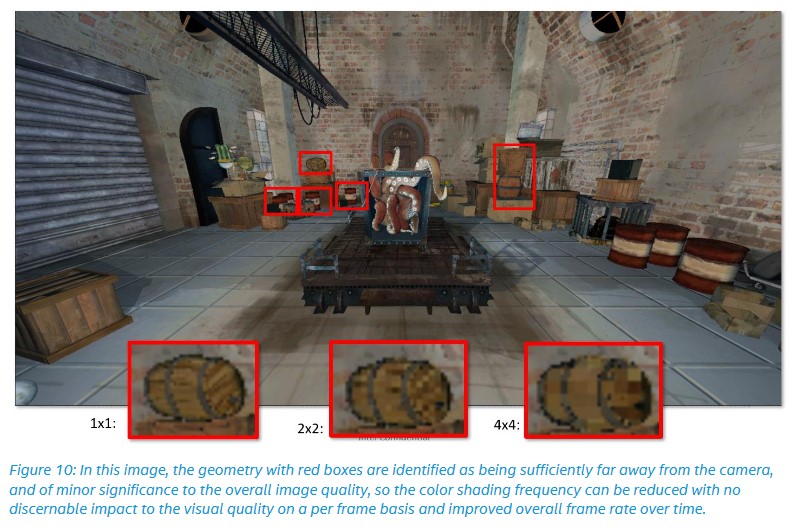

CPS works like that, but it doesn’t have to be done by developers manually. The feature is implemented as an API in games which can be retrofitted, and will reduce the resolution of parts of an image that are either too far away, or not immediately within the player’s near-field of view. Intel has a list of scenarios where it would be best used, but the three primary ones are: objects at a distance from the camera; objects in motion; and objects behind a blurring effect. Instead of rendering those objects at a high fidelity, the shaders are sampled to see if there are shaders which are executed more than once, and the results of those are re-used to build the 3D object in-game rather than re-do all that work multiple times. The performance increase may be small, but the power savings may be much larger.
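The general idea of shading at a coarser rate can be shown with a toy software renderer. This is only an illustration of the concept, not how Intel’s hardware or API actually works: the `shade` function is a stand-in for a real pixel shader, and `render` simply runs it once per block of pixels and reuses the result.

```python
def shade(x, y):
    """Stand-in pixel shader (hypothetical): a simple gradient value."""
    return (x + y) % 256


def render(width, height, coarse=1):
    """Shade once per coarse x coarse block and reuse the result for every pixel in it."""
    image = [[0] * width for _ in range(height)]
    invocations = 0
    for by in range(0, height, coarse):
        for bx in range(0, width, coarse):
            colour = shade(bx, by)  # one shader run per block
            invocations += 1
            for y in range(by, min(by + coarse, height)):
                for x in range(bx, min(bx + coarse, width)):
                    image[y][x] = colour
    return image, invocations


_, full_count = render(8, 8, coarse=1)
_, coarse_count = render(8, 8, coarse=2)
print(full_count, coarse_count)  # 64 16
```

With 2x2 blocks the shader runs a quarter as often, which is where the power savings come from. The trade-off is lost detail inside each block, which is why the technique is aimed at distant, fast-moving or blurred objects.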

Gen 11 also supports Intel’s implementation of VESA Adaptive-Sync to support variable refresh rates in monitors, as well as hardware support for panel self-refresh (PSR). PSR has been working on Intel’s hardware for more than a decade and was first demoed on a system in 2008, but displays that supported self-refresh technology weren’t widely available back then. Most of them only appeared in mobile phones and tablets (Nokia, primarily), where the options for saving power were much more limited. In Intel’s bid to save more power on laptops, support for PSR and variable refresh rates will see displays drop as low as 24Hz for video content, with power draws as low as one watt.

Intel’s Ice Lake family is expected to launch first in mobile form factors in late 2019. It will be the company’s first mass-market chip family produced on their 10nm production process, and will bring with it increased raw GPU performance.

Last Updated: March 26, 2019

Kromas

March 26, 2019 at 11:28

Comparing your brand new unreleased chip to that of an Xbone is kinda moot. How old is this console now?

Llama In The Rift

March 26, 2019 at 11:49

+- 6 freaking years old, ill just be waiting for any news on the dedicated GPU end…but by the look of things were basically getting whats already available, but what about the RAYS?!? is gonna be every GPU enthusiast question.