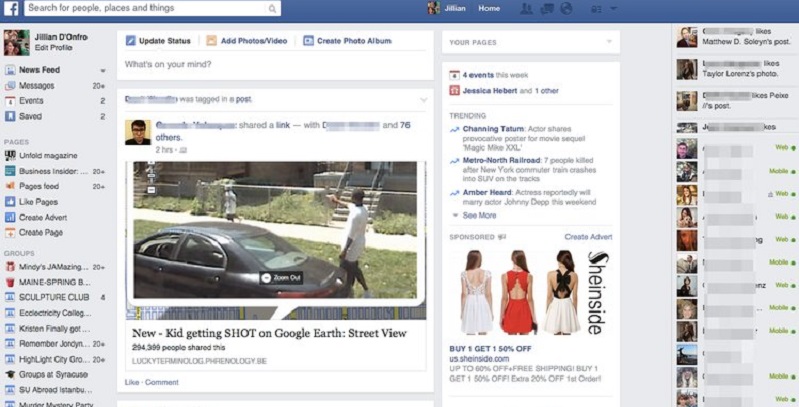

Facebook is trying hard to clean up its image (whether it can clean up its act is another matter entirely) and, in a new initiative, will reportedly (via The Verge) be announcing a set of updates designed to reduce the reach of harmful content across its platform. One of the biggest vectors of harmful content is “fake news”, which Facebook is going to tackle through the way it handles stories in its news feed.

First up is going to be an effort from the company to measure the quality of publishers and whether they are legitimate, big publications or not, with these professional news sites ultimately getting a higher weighting for promotion on its news feed. At the same time, groups that are flagged for “repeatedly sharing misinformation” will get a lower weighting and therefore a lower distribution on the news feed (though strangely, still some form of promotion). It’s a similar strategy to how Google tends to rank its search results, and you have to wonder why it’s taken Facebook this long to put some sort of measure like this in place.

Facebook is also going to be making a greater effort to actually fact-check these stories. Rather than relying on some AI algorithm to figure this out, though, the company plans to team up with The Associated Press to start fact-checking US-related news and videos, and to include a Trust Indicator next to publications deemed legitimate. It’s not exactly clear if the company plans to extend these measures to other international communities, or stay focused purely on the US for now.

The changes are not purely restricted to the Facebook site and apps though, as the company also revealed that some features on WhatsApp designed to reduce misinformation are also coming to Messenger. Facebook says it’s already started to roll out forward indicators, to let people know when a message has been forwarded to them, and “context buttons,” so people can look up more details around information they’ve been sent.

In addition to making changes to limit the spread of misinformation, Facebook is also making some small changes designed to keep users safe. Those include:

- A more detailed blocking tool on Messenger

- Bringing verified profile badges to Messenger

- Letting people remove posts and comments from a group, even after leaving the group

- Adding more information around “Page quality,” including its “status with respect to clickbait”

- Reducing the spread of content that doesn’t quite warrant a ban on Instagram (Facebook says “a sexually suggestive post” might be allowed to remain on followers’ feeds, but not appear in Explore)

- Increasing scrutiny of groups’ moderation when determining if a group is violating rules

These are all great initiatives by the company and will certainly help to give a lot of the news on the site more relevance. It’s great that the company has finally realised that, unfortunately, society cannot regulate itself and is now starting to act on that. Sadly though, you have to wonder if all of this is perhaps only due to the political pressure the company continues to face, and it remains to be seen if the company’s new efforts will result in a longer-term culture change in the organization.

Last Updated: April 11, 2019

Admiral Chief

April 11, 2019 at 11:28

Reporting things on Facebook is like trying to convince Geoff to try homeopathic medicine